The resource-constrained nature of edge devices poses unique challenges in meeting strict performance requirements. In practice, however, performance benchmarks for deployed models are often run manually and infrequently, and other phases of the development workflow, such as the conversion of high-level code to C/C++ code, might not be evaluated for performance at all. Although this strategy yields important insights for improving the final product, integrating performance testing throughout the product development process enables earlier detection and mitigation of performance issues. In this work, we propose an automated workflow that streamlines the performance evaluation and optimization of deployed deep learning models on edge devices.

First, our approach automates the conversion of high-level code to optimized C/C++ code, deploys it to the target device, and runs the performance benchmark to evaluate the deployed code. This automation saves time, reduces the likelihood of errors, and enables continuous evaluation of model performance. The benchmark incorporates the MLPerf Loadgen interface [1, 2] to evaluate metrics such as latency and accuracy, so that we can assess the impact of optimization techniques such as pruning, quantization, and projection. Automating this workflow allows developers to iteratively refine their models and strike the right balance between performance and resource constraints.

Second, once a performance gap is identified, developers can use our in-house profiling tool to debug the issue and determine its root cause. We showcase how this profiling tool can be used across the end-to-end development cycle, from the high-level code to the generated code running on the edge device. The tool visualizes code execution stacks as a timeline, making it easy for developers to pinpoint the source code responsible for performance bottlenecks and regressions.

By making both automated benchmarking and end-to-end profiling integral parts of the development cycle, we help development teams meet performance requirements and improve the quality of our final shipping product. The insights shared can be leveraged for other use cases, and we can use our collective knowledge to drive advancements in performance for tinyML applications.
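To make the benchmarking step concrete, the following is a minimal sketch of a single-stream latency measurement with a regression check against a stored baseline. It is an illustration only: it does not use the MLPerf Loadgen API or the in-house tooling described in the abstract, and every name in it (`measure_latencies`, `percentile`, `has_regressed`, the `run_inference` callable, and the 10% tolerance) is a hypothetical stand-in.

```python
import math
import time
from typing import Callable, Sequence


def measure_latencies(run_inference: Callable[[object], object],
                      samples: Sequence[object],
                      warmup: int = 2) -> list[float]:
    """Issue one query at a time and record per-query latency in seconds.

    `run_inference` is a hypothetical stand-in for a call into the
    deployed model; it is an assumption, not part of any real API.
    """
    for sample in samples[:warmup]:
        run_inference(sample)  # warm-up runs are executed but not timed
    latencies = []
    for sample in samples:
        start = time.perf_counter()
        run_inference(sample)
        latencies.append(time.perf_counter() - start)
    return latencies


def percentile(values: Sequence[float], pct: int) -> float:
    """Nearest-rank percentile for an integer pct in the range 1..100."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def has_regressed(current: float, baseline: float,
                  tolerance: float = 0.10) -> bool:
    """Flag a regression when latency exceeds the baseline by more than
    the given relative tolerance (10% here, an arbitrary example value)."""
    return current > baseline * (1 + tolerance)


if __name__ == "__main__":
    # Dummy workload standing in for inference on the target device.
    latencies = measure_latencies(lambda x: sum(range(1000)), list(range(50)))
    p90 = percentile(latencies, 90)
    print(f"p90 latency: {p90 * 1e6:.1f} us")
```

Running a check like this on every build, rather than manually and occasionally, is the kind of continuous evaluation the abstract argues for; a real setup would replace the dummy workload with inference on the edge device and persist the baseline between runs.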

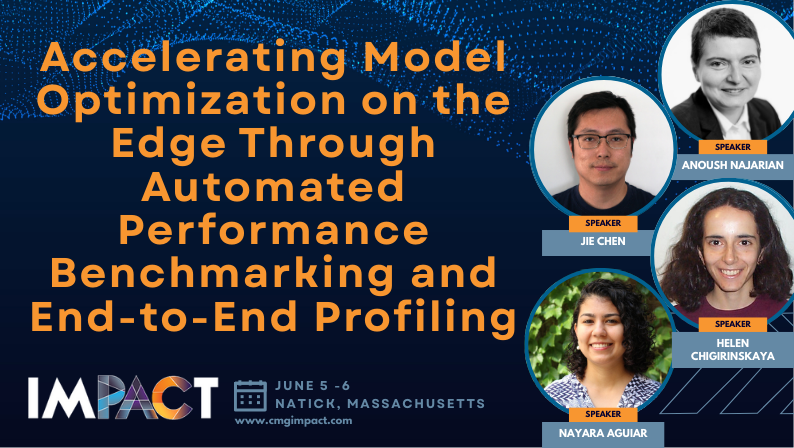

Anoush Najarian is a Software Engineering Manager at MathWorks, where she leads a team focusing on performance engineering and helps nurture grassroots DEI (diversity, equity, and inclusion) and Ethical AI efforts. Anoush’s team pioneered the AI-assisted coding work at MathWorks. Passionate about making the tech community welcoming to everyone, Anoush co-led and co-coached teams of hundreds of speakers and power TAs (teaching assistants), most of whom are women, to build highly technical (and fun!) workshops that have since been presented to thousands of participants around the world.

Anoush holds a Red Diploma from Yerevan State University in Applied Math/Computer Science. Anoush was awarded a fellowship for a non-degree graduate program in Economics by the US Department of State. Anoush holds two Master of Science degrees from the University of Illinois at Urbana-Champaign: one in Math and one in Computer Science.

Anoush has served on the organizing committees of conferences focusing on computer science, performance engineering, and machine learning/AI research. Anoush is a Board Director and Past Chair of the Board of Directors of CMG (Computer Measurement Group) https://www.cmg.org/, a professional organization of performance engineers. Anoush is a conference committee member of the ACM/SPEC International Conference on Performance Engineering (ICPE) https://icpe2025.spec.org/, a reviewer for the workshop “Algorithmic Fairness through the Lens of Metrics and Evaluation” (AFME) https://www.afciworkshop.org/, and a committee member for IEEE 7009.1 Standard for Safety Management of Autonomous and Semi-Autonomous Systems–Interventions in the Event of Anomalous Behavior https://standards.ieee.org/ieee/7009.1/11850/. Anoush served as the AI and Data Science Co-Chair of GHC (Grace Hopper Celebration) https://ghc.anitab.org/, the inaugural Virtual Co-Chair of ICML (International Conference on Machine Learning) https://icml.cc/, and Meetup Co-Chair of NeurIPS https://neurips.cc/. Anoush is the Senior Organizer of the WiML (Women in Machine Learning) Mentorship Program https://sites.google.com/view/wimlmentorship202425/. Anoush is on the 100 Brilliant Women in AI Ethics list; for more about Anoush’s background and involvement in Ethical AI, see: https://medium.com/women-in-ai-ethics/iamthefutureofai-anoush-najarian-49b13029dbca

Helen Chigirinskaya is a Senior Performance Engineer at MathWorks, focusing on benchmarking and performance analysis for Deep Learning workflows, including tool development to facilitate benchmark development and automation. She received her BA in Computer Science and Biology from Cornell University.

Nayara Aguiar is a Senior Performance Engineer at MathWorks, currently focusing on benchmarking and performance analysis for Deep Learning workflows. She has also contributed to software development projects on numerical optimization, data analysis, and applications related to the analysis of the electricity grid.

Nayara received her Ph.D. in Electrical Engineering from the University of Notre Dame, where her research interests were at the intersection of power systems, renewable energy, and economics. She has also volunteered in women-in-STEM initiatives throughout her career, having supported activities of affinity groups from the IEEE Women in Engineering (IEEE WIE), the Graduate Society of Women Engineers (GradSWE), and the Association for Women in Science (AWIS).

Nayara has participated as a speaker and panelist in different events, such as a workshop for the 2023 Women in Data Science (WiDS) Datathon, multiple conferences by the Computer Measurement Group (CMG), the 2024 Grace Hopper Celebration, and the tinyML Summit 2024. In these events, she presented hands-on AI workshops, spoke about deep learning applications related to sustainability, shared challenges related to performance benchmarking on embedded targets, and discussed green initiatives in the technology industry.

Jie Chen is a Principal Engineer and Team Lead at MathWorks, where his work focuses on application startup, cloud native performance, performance tooling, and data analytics. He received his PhD in Computer Engineering from George Washington University.

He served as chair of the Artificial Intelligence session at the 2023 IEEE International Symposium on Workload Characterization (IISWC) and as a publicity chair for the 2019 IEEE International Symposium on High-Performance Computer Architecture (HPCA). Dr. Chen holds one US patent, and his research work has been published in flagship conferences and journals such as IEEE/ACM MICRO, IEEE Micro Magazine, and Elsevier JPDC. He received the IEEE Best Poster Award at the 20th International Conference on Parallel Architectures and Compilation Techniques (PACT), and his research work was selected as one of the Best Papers from HotPower 2011.