June 5–6, 2025 | Now Virtual!

Important Update: IMPACT 2025 Is Going Virtual

Due to an unexpected system outage at our venue with no clear timeline for resolution, we have decided to move IMPACT 2025 to a fully virtual format. The event will still take place on the original dates, June 5–6, 2025.

We’re working closely with speakers to confirm their participation in the updated program and will provide access links and details to all registrants shortly.

We appreciate your understanding and look forward to welcoming you online for two days of impactful content and conversation.

CMG is a practitioner-led, vendor-neutral professional association for enterprise technology specialists. We host events and offer training, leadership opportunities, and membership for our community members, who value experience-based learning and personal connections with peers from both customer and vendor enterprises.

For over 45 years, CMG has been the source of vendor-agnostic expert advice and information for IT professionals dedicated to managing hardware, software, infrastructure, and computing systems across the enterprise.

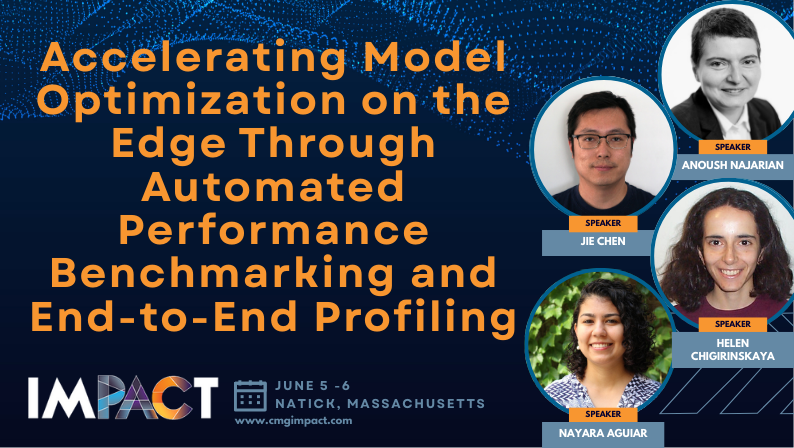

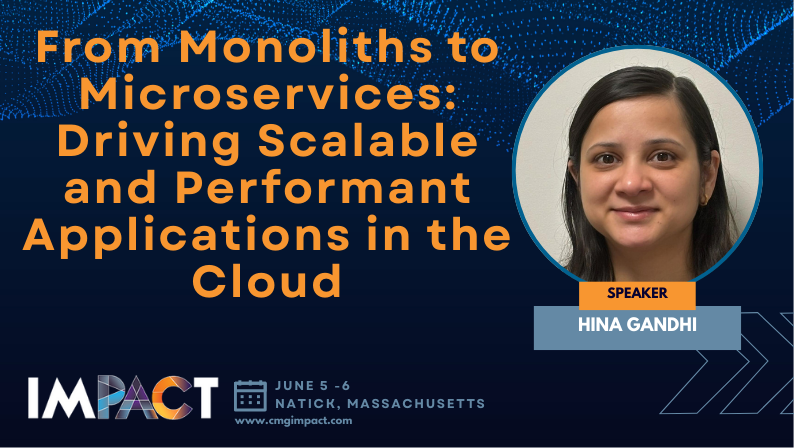

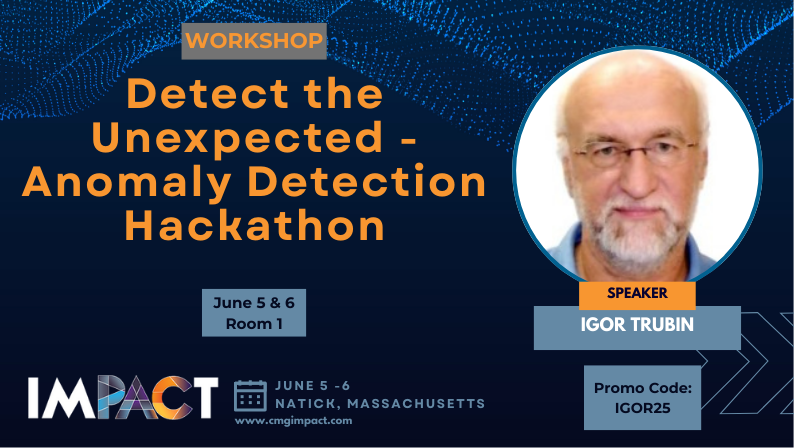

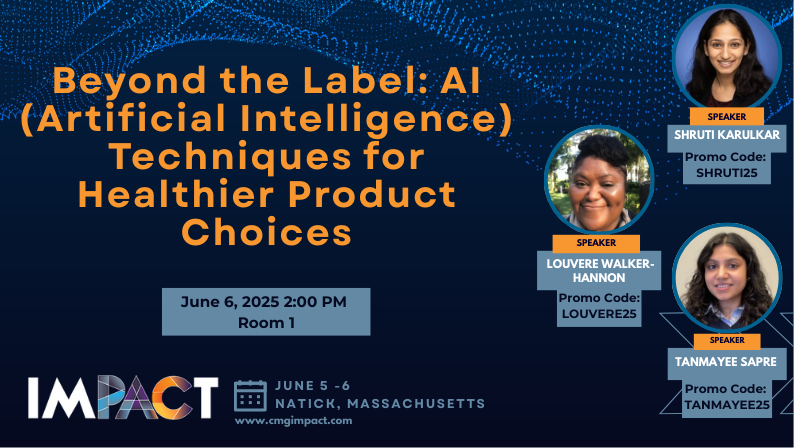

Sessions and Speakers

High Value Learning

Keynote and breakout sessions explore major trends in enterprise IT and digital transformation.

Tech Demonstrations

Key opportunities to learn about real solutions and product offerings.

Networking & Exhibitor Sessions

Meet our speakers, sponsors, and other attendees through networking opportunities.

Great Speakers

With an emphasis on peer-to-peer learning, attendees will pick up actionable solutions from fellow industry experts.

Exciting Giveaways

Participate in games, interactive sessions, and contests to win exciting giveaways.

Live Learning

Engage in live sessions online, now that the event has moved from Natick, Massachusetts, to a fully virtual format.

We're now working with partners on bespoke sponsor participation packages.

Let's Reconnect in Natick, Massachusetts

IMPACT 2025 will take place in Natick, Massachusetts, a thriving hub of innovation and technology located just outside Boston. Known for its vibrant business community, Natick is home to leading companies and a growing ecosystem of cutting-edge industries. Conveniently situated in the heart of the Greater Boston area, Natick offers both prestige and accessibility.

IMPACT 2025 will be hosted at MathWorks, located at 1 Apple Hill Drive in Natick, Massachusetts. MathWorks, a global leader in mathematical computing software, is renowned for its cutting-edge innovations that power engineers and scientists in industries ranging from aerospace to finance.

The MathWorks campus reflects the company’s commitment to excellence, featuring state-of-the-art facilities designed to foster collaboration and creativity. Attendees will enjoy a modern, welcoming environment equipped with the latest technology, providing an ideal space for impactful discussions and learning. Situated in the vibrant town of Natick, the venue is easily accessible from Boston and surrounding areas, offering a perfect blend of professionalism and convenience.

Whether you’re attending to network or to deepen your knowledge, MathWorks provides a dynamic setting that inspires innovation and growth.

For attendees of IMPACT 2025 at MathWorks in Natick, Massachusetts, there are several nearby accommodations to suit various preferences and budgets.

MathWorks, located at 1 Apple Hill Drive in Natick, Massachusetts, is easily accessible by car and public transportation. For those traveling by car, the venue is conveniently situated near major routes, including the Massachusetts Turnpike (I-90), with ample parking available on-site. If arriving by public transit, the nearby Natick Center MBTA Commuter Rail station offers connections to Boston and surrounding areas, making it an accessible option for attendees. Once in Natick, getting around is straightforward with rideshare services like Uber and Lyft readily available. The town also boasts a variety of local amenities, from shopping at the Natick Mall to dining at nearby restaurants, ensuring visitors have everything they need within close reach.

Early Bird Full Registration (Extended to May 16): $199

Regular Full Registration: $299